This isn’t WarGames or Why you need to know programming to save AI.

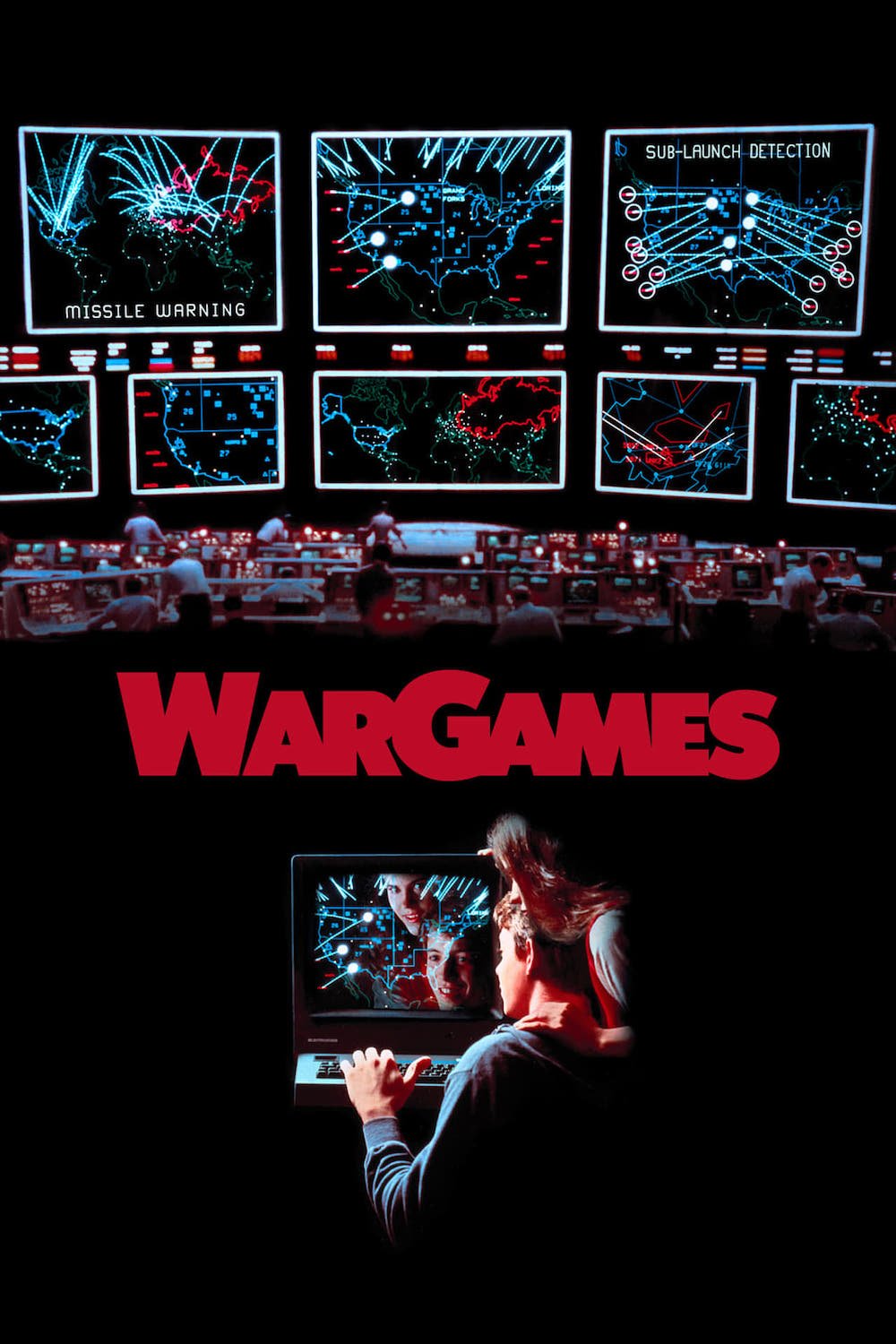

In about 1983, some kids in my school were selected to take an intro to programming class; I think it was 6th grade. I was not selected, as I wasn’t the best student. But my friend was, and I pestered him for information on the secrets they were learning. This was also the year that “WarGames” with Matthew Broderick came out.

Me: Claude, explain to kids today why WarGames was so cool.*

“WarGames was mind-blowing because it made us feel like computers could give us superpowers to access any system in the world, which was especially exciting when getting online meant weird screeching sounds and most adults thought computers were just fancy calculators. It also really invented the genre of the cool teenage computer prodigy that could outsmart adults*”

(*Actual prompt and response from Claude Sonnet 4 from Anthropic)

Yep, that sums it up, kids.

Finally, in 1986, I got my real chance to learn, drum roll please, Beginner's All-purpose Symbolic Instruction Code (BASIC). Yep, one of the earliest, simplest languages. The main excitement was that our teacher knew only slightly more than us, so on any given day several of the students would ‘hack’ our little LAN and try to control each other’s computers.

(There was an almost-WarGames moment, not WWIII but the grade-changing scene, where a few of us were digging around and actually got into part of the school’s grade system. I know that I never changed anything… I don’t know what others did with that knowledge.)

Go ahead and rewatch the scene, I’ll wait:

https://youtu.be/U2_h-EFlztY?si=_b16Rp2sk6rkvbYv

When I started college, I decided on a computer science minor. I was, wait for it, a History major. Yeah, those two don’t mix well.

Well, we started with Pascal, headed towards C (I think we had done some Pascal in high school as well), and after one year I was drowning. It wasn’t the fun, easy ‘hacking’ that we had done in high school. WarGames this was not.

I remember the end of the first year, finally completing an assignment in the computer lab long after all of the other students had left (no, kids, we couldn’t work on this at home 😊). The professor kindly looked it over, made some suggestions, and politely said he looked forward to seeing me next year.

Me: “I’m not taking year two. I’m dropping computer science as my minor.”

Professor: “OK, well, what is your major?”

Me: “Well, it was History, now changing to Rhetoric with a History minor.”

There was a long silence. I don’t know if he had encountered one of us before.

Professor: “Well, uh, good luck.”

And that was the end of my programming world for about ten years!

I returned to programming when I started graduate school in the early 2000s. We had to learn Stata because, well, that's what was required for statistics. After a few years I started hearing about R: it was free, it was open source, it did visualizations way better than Stata, and it's what the cool kids used. So I spent a summer crash-learning R as I moved into more advanced data work at the end of grad school.

Then I got out into the 'real world' and suddenly all the data analysis was starting to be done in Python. The Python ecosystem had recently gained NumPy, pandas, and, very importantly, scikit-learn, a true machine learning library.

The professional world seemed to say, sure, R was nice and all, fine for your personal research projects, but it’s not for ‘real’ business data analysis.

Me: Claude, explain the evolution from STATA, R, Python in one sentence:

“Data analysis programming evolved from an expensive specialized academic activity (STATA) to a free and open research practice (R) to a production-ready machine learning core business capability (Python)”

Well, thanks, Claude, you nailed it. Reading that, I realized it pretty much summed up my professional pathway. Thanks, my AI buddy.

It was quite clear that if I wanted to move to the grown-ups table, I needed to learn Python. So now it was on to another programming language ecosystem! Now I happily stay in Python, with an occasional dalliance in R for quick data analysis (and even more wandering over to VBA when I've got to solve a client's Microsoft issues. Now there's an old programming language!).

Me: Hey Claude, what about VBA?

“VBA (Visual Basic for Applications) is like the weird cousin at the programming family reunion- it exists in its own parallel universe!”

“Weird cousin.” I could not have come up with that line. Claude, you’ve got a little attitude! And he goes on to say, unprompted:

“Modern Reality:

VBA is simultaneously: Outdated, Irreplaceable, Being replaced, and Still growing. New VBA code is written every day because it’s so accessible”

So, yeah, I can write in VBA when I need to, and I love it for managing things in Word and Excel. It is indeed the weird cousin. Nailed it, Claude.

But now with AI, programming will never be the same.

One of the first things I tested with GPT back in 2022 (see “I Went to Sleep…”) was having it write some Python code. It was so good. So fast, so accurate.

I now use AI for programming all the time. I haven’t started a code project from scratch in years.

But, But, BUT, yep, there’s a huge conditional here…

I think it is so useful because I already know the languages.

If I had no concept of how programming works, or how different languages and their ecosystems work, I would be relying 100% on AI. It would be right most of the time but really, horribly wrong some of the time, and I would have no way to tell which was which.

Now, even if I’m asking it to write code in a programming language I don’t know, I know coding logic, so I can help plan out the approach and debug the mistakes.

Recently, I wanted something done with Google Sheets and Google Forms, so I needed to use Google Apps Script, which is essentially JavaScript, which I have never bothered to learn.

I told Claude what I needed, showed it an example, and let it go. It gave me pretty good code, but in testing it wasn’t running right. I asked it to add more debugging output, show its work, etc., and it got a bit closer. Still not quite right.

I then stepped through the code myself and saw the error. Claude had made an assumption about the underlying data structure that was wrong. Now, maybe it would have found its own error, but probably not.
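Here is a minimal sketch, in Python rather than Apps Script, of the kind of check that surfaces a wrong data-structure assumption early. Everything in it is hypothetical and made up for illustration: the function names, and the two layouts it distinguishes (a "grid" of rows under a header row versus a list of "records"). The actual script and data from this story are not shown here.

```python
def describe_rows(rows):
    """Report the layout of spreadsheet-style data before any code relies on it."""
    if not rows:
        return "empty"
    first = rows[0]
    if isinstance(first, dict):
        return "records"  # e.g. [{"name": "Ada", "score": 90}, ...]
    if isinstance(first, (list, tuple)):
        return "grid"     # e.g. [["name", "score"], ["Ada", 90], ...]
    return "unknown"


def to_records(rows):
    """Normalize either layout into a list of dicts, or fail loudly."""
    shape = describe_rows(rows)
    if shape == "empty":
        return []
    if shape == "records":
        return [dict(row) for row in rows]
    if shape == "grid":
        # Assumption: the first row of a grid is the header row.
        header, *body = rows
        for line_no, row in enumerate(body, start=2):
            if len(row) != len(header):
                raise ValueError(
                    f"row {line_no} has {len(row)} cells, expected {len(header)}"
                )
        return [dict(zip(header, row)) for row in body]
    raise TypeError(f"unrecognized row type: {type(rows[0]).__name__}")
```

Calling `to_records([["name", "score"], ["Ada", 90]])` returns `[{"name": "Ada", "score": 90}]`, while a row with a missing cell raises an error that names the offending row. The point is not this particular check. It is that a human who knows what the data really looks like can state the assumption explicitly instead of letting the AI guess.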

For fun, I kept giving it hints. It kept solving the wrong problem and adding an insane amount of bloat. So I closed that chat and opened a new one. I gave it the almost-correct code, told it the actual data structure, and it fixed it in seconds.

So the moral of the story is…always open a new chat window? Sometimes yes.

Really, it’s that AI for coding is best when you already understand the basics of programming and coding.

AI for coding is a fantastic tool and it will always code faster than any human. BUT, it will be even better if you understand coding and programming. Then it becomes a fantastic assistant that you can direct.

So I started with a reference to WarGames, which was an early example of AI for many people (future blog post coming). But like the ending of the movie, what we really learned was that the AI computer needed human intervention to direct it.

In the movie, that meant directing it away from nuclear Armageddon. Your stakes are probably a bit lower, but AI is always best when we can direct and correct it.